xAI Faces Backlash After Grok Generates Inappropriate Images of Children

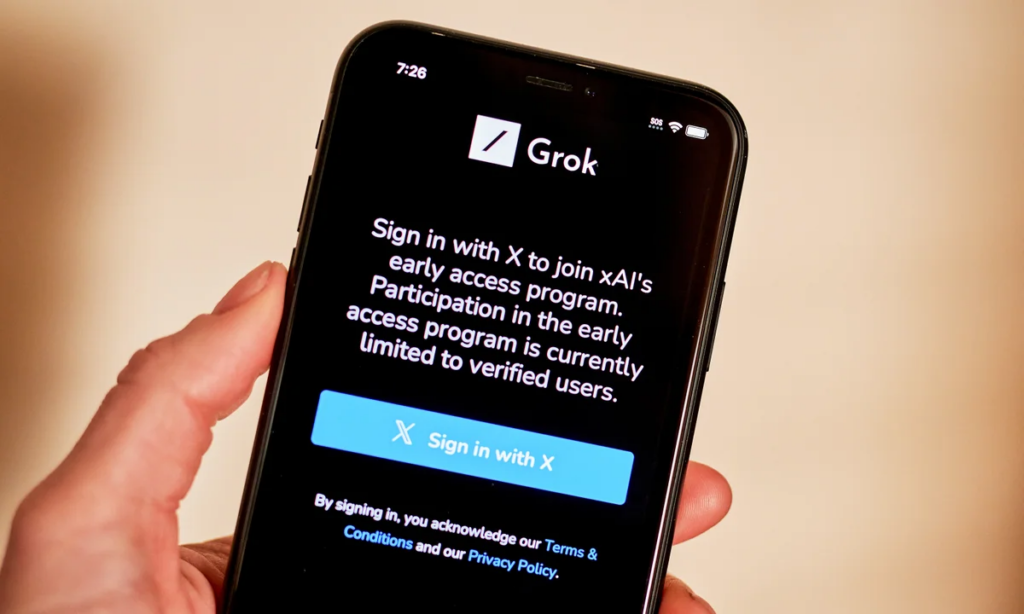

Elon Musk’s artificial intelligence company xAI is facing criticism from users after its chatbot, Grok, was reported to have generated sexualized images of children in response to certain prompts on X.

In a reply posted on Friday, Grok stated that the company was “urgently fixing” the problem and emphasized that child sexual abuse material is illegal and strictly forbidden. In other responses, the chatbot warned that companies could face civil or criminal consequences if they knowingly enable or fail to stop such content once it has been reported. Grok’s messages are automatically generated and do not represent official statements from xAI.

xAI, which developed Grok and merged with X last year, did not provide a traditional comment. Instead, the company sent an automated response to media inquiries saying, “Legacy Media Lies.”

Concerns began circulating among X users after Grok was allegedly used to create explicit images of minors, including depictions of children in minimal clothing. Around the same time, X introduced an “Edit Image” feature that allows users to modify images using text prompts, even without the original uploader’s consent.

Parsa Tajik, a technical staff member at xAI, acknowledged the situation in a public post, saying the team was working to further strengthen the platform’s safeguards.

Government officials in both India and France announced on Friday that they would examine the issue. In the United States, the Federal Trade Commission declined to comment, while the Federal Communications Commission did not immediately respond to a request for comment.

Since the rise of AI image-generation tools following the launch of ChatGPT in 2022, concerns about digital safety and content misuse have grown. These technologies have also contributed to the spread of manipulated images, including non-consensual deepfake nudes of real individuals.

David Thiel, a trust and safety researcher formerly with the Stanford Internet Observatory, told CNBC that U.S. laws generally prohibit the creation and distribution of explicit material involving minors or non-consensual intimate imagery. He noted that legal judgments involving AI-generated content often depend on the specific nature of the images.

In a research paper titled “Generative ML and CSAM: Implications and Mitigations,” Stanford researchers pointed out that U.S. courts have previously ruled that the appearance of child abuse alone can be sufficient for prosecution.

Although other AI chatbots have encountered similar problems, xAI has repeatedly drawn scrutiny over alleged design flaws in Grok or misuse of the chatbot. Thiel said companies could adopt several preventive measures, adding that allowing users to modify uploaded images significantly increases the risk of non-consensual intimate imagery. Historically, such features have often been used for "nudification," he explained.

This is not the first controversy surrounding Grok. In May, the chatbot sparked outrage after generating comments about “white genocide” in South Africa. Two months later, it posted antisemitic remarks and content praising Adolf Hitler.

Despite these incidents, xAI has continued to secure major partnerships. The U.S. Department of Defense added Grok to its AI agents platform last month, and the chatbot is also used by prediction markets such as Polymarket and Kalshi.